Yves Brise

Partner

Companies of all sizes are trying to unlock the business value of their data in order to stay competitive. So-called data-driven businesses are already able to make more precise decisions by making all kinds of data available immediately and securely, so that it can be processed and analyzed across the entire organization.

Authors: Yves Brise & Fabio Hecht

Until recently, making large volumes of data (several million messages per second) available in real time on a single platform was an enormous challenge for companies. That is now changing.

Apache Kafka is an open-source, highly available, cloud-native, horizontally scalable real-time streaming platform for enterprises that solves this problem. Developers like it for its elegant design, its APIs, its tooling and its support. The business side values Kafka for the opportunities that open up when large volumes of data are collected and analyzed in real time.

In his blog post, David Konatschnig explains what Kafka is, outlines the business value of the platform and points out how it differs from other messaging systems. In this article we illustrate three reasons why companies should adopt Kafka as the streaming platform for their data-driven transformation.

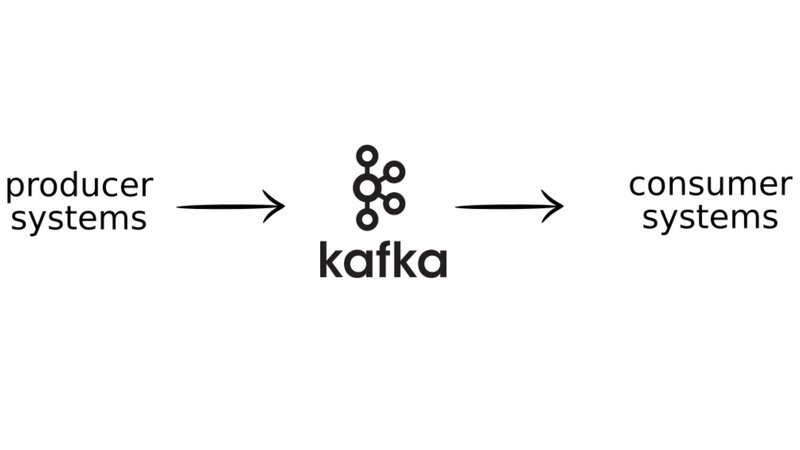

At its core, Kafka is a publish-subscribe (pub-sub) messaging system. It scales particularly well: it supports a large number of producers and consumers, and adding servers increases capacity almost linearly. As a result, in many cases several million messages per second can be processed.
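The near-linear scaling comes from splitting each topic into partitions that can be spread across servers and across the consumers of a group. The following is a minimal in-memory sketch of that idea, not the Kafka API: the `Topic` class and the `assign` function are hypothetical names, and `zlib.crc32` merely stands in for the hash function a real Kafka producer uses.

```python
import zlib
from collections import defaultdict


class Topic:
    """Toy stand-in for a partitioned topic (illustration only, not Kafka)."""

    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]

    def publish(self, key, value):
        # The same key always hashes to the same partition,
        # so the order of messages per key is preserved.
        index = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[index].append((key, value))
        return index


def assign(num_partitions, consumers):
    """Spread partitions round-robin over the consumers of one group."""
    assignment = defaultdict(list)
    for partition in range(num_partitions):
        assignment[consumers[partition % len(consumers)]].append(partition)
    return dict(assignment)


topic = Topic(num_partitions=4)
first = topic.publish("order-1", "created")
second = topic.publish("order-1", "paid")
print(first == second)           # True: both messages share a partition
print(assign(4, ["c1", "c2"]))   # {'c1': [0, 2], 'c2': [1, 3]}
```

In this simplified model, adding partitions and consumers spreads the same stream over more workers, which is the intuition behind the scaling behavior described above.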

Its security features support encrypting data and enforcing access control policies. Kafka can therefore serve as the central messaging platform for the entire company.

These two properties, scalability and security, allow Kafka to replace outdated, poorly scaling MQ systems that are costly to maintain, taking over their role of decoupling components and buffering messages (see Fig. 1).
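Decoupling and buffering can be pictured as an append-only log in which every consumer group keeps its own read position. The sketch below is a simplified in-memory model under that assumption; the `Log` class and the group names are hypothetical and do not correspond to Kafka client classes.

```python
class Log:
    """Toy append-only log; each consumer group tracks its own offset."""

    def __init__(self):
        self.records = []
        self.offsets = {}  # group name -> index of the next unread record

    def append(self, record):
        # Producers only append; they never need to know who reads.
        self.records.append(record)

    def poll(self, group, max_records=10):
        # Consumers read from their own offset and advance it.
        start = self.offsets.get(group, 0)
        batch = self.records[start:start + max_records]
        self.offsets[group] = start + len(batch)
        return batch


log = Log()
for event in ["e1", "e2", "e3"]:
    log.append(event)

print(log.poll("billing"))     # ['e1', 'e2', 'e3']
log.append("e4")               # produced while no consumer is polling
print(log.poll("billing"))     # ['e4']
print(log.poll("analytics"))   # ['e1', 'e2', 'e3', 'e4']
```

Because records stay in the log, a consumer that was offline or joined later can catch up at its own pace without the producer being involved.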

As the business grows, technologies evolve and events such as acquisitions and mergers occur, companies have to adapt quickly and cost-effectively. This can be achieved by extracting data from information silos, including legacy systems, and making it available in a cloud-native, microservice-based way without changing existing core systems. Such a process is important when breaking up large, monolithic applications into smaller, more agile parts.

Kafka Connect moves data between Kafka and a wide variety of systems, in both directions. The supported systems range from relational databases and messaging systems to cloud and mobile apps. Both batch and real-time data integration modes are supported (see Fig. 2).

Streaming data into Kafka is often possible through configuration alone with Kafka Connect (see the list of available connectors). Where no suitable connector exists, the modern architecture, the documentation and the availability of open-source connectors make it relatively easy to develop a new one.
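To give an impression of what "through configuration alone" can look like, the sketch below builds a request for the Kafka Connect REST interface that would register a JDBC source connector. It assumes that the Confluent JDBC source connector plugin is installed on the Connect worker; the database URL, the connector name `crm-source`, the topic prefix and the helper `build_connector_request` are made up for illustration. The request is only constructed here, not sent.

```python
import json
import urllib.request


def build_connector_request(connect_url, name, config):
    """Build (but do not send) a POST request that registers a connector."""
    body = json.dumps({"name": name, "config": config}).encode("utf-8")
    return urllib.request.Request(
        url=f"{connect_url}/connectors",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical example values; adjust to the actual database and topics.
jdbc_source_config = {
    "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
    "connection.url": "jdbc:postgresql://db.example.internal:5432/crm",
    "mode": "incrementing",            # pick up new rows by a growing id
    "incrementing.column.name": "id",
    "topic.prefix": "crm-",            # table "customers" -> topic "crm-customers"
    "tasks.max": "1",
}

request = build_connector_request(
    "http://localhost:8083", "crm-source", jdbc_source_config
)
# urllib.request.urlopen(request) would submit it to a running Connect worker.
```

No code changes in the source database application are needed in this setup; the legacy system is only read from.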

This also makes Kafka a suitable platform for the following use cases:

A data-driven business requires that data is not only collected and made available across the entire company, but also analyzed. Data flowing through Kafka can be analyzed both with Kafka's own tools and with external tools.

Kafka's own tools include the Kafka Streams API, a Java library aimed at experienced developers, and KSQL, a SQL-like query language aimed at data analysts. The advantage of analyzing data inside Kafka is that no additional system has to be operated. When configured accordingly, it can also be guaranteed that every event is processed exactly once (exactly-once semantics).
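A typical analysis expressed with such tools is a windowed aggregation, for example counting events per key in fixed time windows. The following plain-Python sketch only illustrates that logic on a small list; it does not use Kafka Streams or KSQL, and the function name `tumbling_window_counts` and the sample events are hypothetical.

```python
from collections import Counter


def tumbling_window_counts(events, window_ms):
    """Count events per (window start, key) in fixed, non-overlapping windows."""
    counts = Counter()
    for timestamp_ms, key in events:
        # Round the timestamp down to the start of its window.
        window_start = timestamp_ms - (timestamp_ms % window_ms)
        counts[(window_start, key)] += 1
    return dict(counts)


events = [(0, "login"), (500, "login"), (1200, "login"), (1300, "purchase")]
print(tumbling_window_counts(events, window_ms=1000))
# {(0, 'login'): 2, (1000, 'login'): 1, (1000, 'purchase'): 1}
```

A streaming engine computes the same kind of result continuously as events arrive, rather than over a finished list.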

If required, data can also be consumed by external data analytics systems (natively where supported, or via Kafka Connect) that are already established in a company or that solve specific problems (see Fig. 3).

Kafka is an important component of the data-driven company because it makes data securely available to the entire organization in real time. Kafka can also be used as a messaging platform to decouple software components and increase the speed of innovation. In addition, it can rescue data from legacy systems without affecting proven, working systems that are difficult to change. Finally, different business units can access and analyze data across divisions to generate data-driven insights.