Yu Li

Principal Architect, Director

Cloud-native applications can be built with containers or with serverless functions. This post explains the advantages and disadvantages of each approach.

Author: Yu Li

Cloud-native application development takes full advantage of cloud computing. What matters is not where applications are developed, but how. This raises the question of which approach to use for cloud-native development: container technologies or serverless functions?

Containers are already used by many companies and continue to grow in popularity. Serverless is also gaining acceptance; in this model, the cloud provider is responsible for executing the code.

Depending on the project, one approach fits better than the other. It is therefore essential to understand both approaches and their respective advantages and disadvantages. This post is intended to help with that.

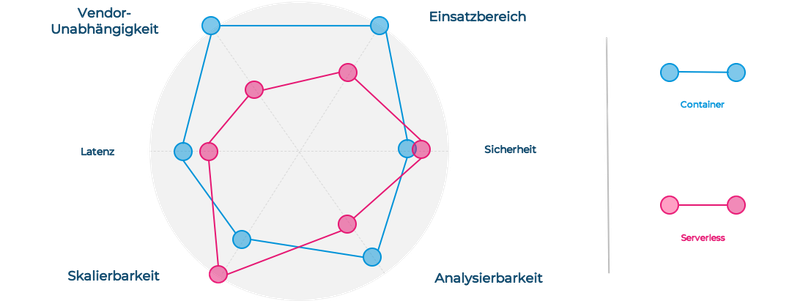

This blog post compares the two approaches across six aspects (Figure 1). The aspects to compare should, however, always be adapted to the project and the customer's situation.

The base technologies for running and orchestrating containers are offered by all major cloud providers (e.g. Microsoft Azure Kubernetes Service or Google Kubernetes Engine). Container-based solutions can therefore be ported between cloud providers with relatively little effort.

Serverless-based solutions, by contrast, cannot be migrated between cloud providers as easily, because serverless products such as AWS Lambda and Azure Functions are implemented in provider-specific ways. To migrate an AWS Lambda function to an Azure Function, for example, the triggers (e.g. HTTP trigger, queue trigger, database trigger) would have to be rewritten.
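
One common way to limit this rewrite effort is to keep the business logic free of provider-specific types and to put only a thin adapter around each trigger. The following is a minimal sketch of that idea, not a complete function app. The function `greet` and both handler names are hypothetical. The first adapter assumes the API Gateway proxy event shape with a JSON string in `event["body"]`; the second assumes a request object with a `get_body()` method that returns bytes, as `azure.functions.HttpRequest` provides. A real Azure Function would return an `azure.functions.HttpResponse` rather than a plain string.

```python
import json


def greet(name: str) -> dict:
    """Provider-independent business logic (hypothetical example)."""
    return {"message": f"Hello, {name}"}


def aws_lambda_handler(event: dict, context: object) -> dict:
    """Thin adapter for an AWS Lambda HTTP trigger.

    Assumes the API Gateway proxy event shape: a JSON string in event["body"].
    """
    payload = json.loads(event.get("body") or "{}")
    result = greet(payload.get("name", "world"))
    return {"statusCode": 200, "body": json.dumps(result)}


def azure_style_handler(req) -> str:
    """Thin adapter for an Azure Functions HTTP trigger.

    Assumes `req` offers get_body() -> bytes. Returns the JSON body as a
    string to keep the sketch free of third-party imports.
    """
    payload = json.loads(req.get_body() or b"{}")
    return json.dumps(greet(payload.get("name", "world")))
```

With this split, a migration only touches the adapters; `greet` stays unchanged.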

Scalability is the strength of serverless. Serverless functions are scaled automatically to match demand, and the scaling is handled entirely by the cloud provider. This saves administrative effort and reduces complexity.

Containers can also scale on demand. Unlike with serverless, however, container scaling is the team's own responsibility.

Serverless scales automatically. However, the latency of scaling from the current state to the target state must be taken into account. If a serverless function is not ready when it is invoked, the so-called "cold start" problem occurs: there is a delay between the invocation and the execution of the function. This can have a significant impact on the user experience. Various workarounds exist for cold starts, such as scheduled pingers, a retry approach, or pre-warmers. Cloud providers also offer dedicated options; Microsoft Azure, for example, offers the Azure Functions Premium plan, which is not subject to the cold start limitation.
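
As an illustration of the retry approach, the following minimal sketch retries a call that times out during a cold start and waits a little longer before each new attempt. The helper `call_with_retry`, its parameters, and the choice of `TimeoutError` as the failure signal are assumptions made for this example; a real client would catch whatever exception its HTTP library or SDK raises.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(
    invoke: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `invoke`, retrying on TimeoutError with exponential backoff.

    The delay doubles after every failed attempt: base_delay, 2 * base_delay, ...
    The last failure is re-raised so the caller can handle it.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return invoke()
        except TimeoutError:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")
```

The `sleep` parameter exists only so that the waiting can be replaced in tests. Note that a retry hides the cold start from the caller's error handling, but it does not remove the added latency.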

Container-based solutions have fewer cold start problems, because they are usually not scaled down to zero replicas. Scale-up, however, must be considered. Kubernetes typically scales according to CPU or memory utilization. In event-based architectures, Kubernetes often reacts too late, because the number of pending messages in the queue has no influence on scaling. To address this, cloud providers such as Microsoft Azure are actively driving solutions such as KEDA [1]. KEDA enables fine-grained autoscaling for event-driven Kubernetes workloads.
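
The difference between the two scaling signals can be made concrete with a small calculation. The sketch below is a simplified model, not the actual Kubernetes or KEDA implementation. The first function follows the ratio-based rule described in the Kubernetes Horizontal Pod Autoscaler documentation (desired replicas = ceil(current replicas × current metric / target metric)); the second derives the replica count from the queue length. All function names, parameters, and limits are assumptions chosen for illustration.

```python
import math


def replicas_for_cpu(current_replicas: int, current_cpu_pct: int,
                     target_cpu_pct: int, max_replicas: int = 10) -> int:
    """Ratio-based scaling on CPU utilization, clamped to 1..max_replicas."""
    desired = math.ceil(current_replicas * current_cpu_pct / target_cpu_pct)
    return max(1, min(max_replicas, desired))


def replicas_for_queue(pending_messages: int, messages_per_replica: int,
                       min_replicas: int = 0, max_replicas: int = 10) -> int:
    """Event-based scaling: one replica per `messages_per_replica` pending messages."""
    desired = math.ceil(pending_messages / messages_per_replica)
    return max(min_replicas, min(max_replicas, desired))


# Two consumers wait on slow downstream calls, so CPU is low while the queue grows.
print(replicas_for_cpu(current_replicas=2, current_cpu_pct=20, target_cpu_pct=60))
print(replicas_for_queue(pending_messages=5000, messages_per_replica=50))
```

In this scenario the CPU-based rule sees no reason to add replicas, while the queue-based rule scales to the configured maximum. The queue-based rule can also return zero replicas when the queue is empty, which is the situation in which containers would face a cold start of their own.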

In summary, both serverless and containers pose latency challenges. In our view, the solutions available for containers are more mature than those for serverless. Because a direct comparison is difficult here, the focus should be on understanding the challenges and clarifying the solutions at an early stage of a project.

The container ecosystem offers extensive tooling for analyzing containers. With serverless, however, the runtime is managed by the cloud provider, which brings additional challenges for performance analysis. Without the ability to install agents that collect metrics directly from the runtime, one has to rely entirely on the capabilities of the cloud platform. Azure Monitor, for example, provides analysis features with metrics for Azure Functions.

The use of containers has spread widely in recent years, and containers are now broadly used in software development. Containers do, however, have certain limitations. For example, containers cannot be scaled in a fine-grained way for event-based applications out of the box, because by default they scale according to CPU and memory usage rather than events.

Serverless technologies can scale from zero to peak load. When large volumes of events arrive, the cloud provider ensures that the serverless functions scale up quickly to process them. Serverless applications are therefore particularly well suited to event-based architectures and to business applications with peak periods.

With serverless, more security responsibility is handed over to the cloud provider. Security topics that must be addressed with containers, such as Kubernetes security, image vulnerabilities, and registry security, are less relevant here.

On the other hand, some new challenges must be considered. With serverless, each function is its own perimeter, which means that every function must be tested individually for security vulnerabilities. Serverless functions are stateless, so state-related information (e.g. the session ID) must be stored externally. This places new demands on data security.
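
The statelessness point can be illustrated with a minimal sketch. The handler below keeps nothing in memory between invocations; everything it needs to remember goes through an external store that is passed in. The handler name and the visit counter are hypothetical, and the plain dictionary used in the usage lines is only a stand-in for a managed database or cache, which is where access control and encryption would have to be addressed.

```python
from typing import MutableMapping


def handle_request(session_id: str, store: MutableMapping[str, int]) -> int:
    """Stateless handler: counts visits per session using only the external store."""
    visits = store.get(session_id, 0) + 1
    store[session_id] = visits
    return visits


# Usage with a dictionary standing in for the external store.
external_store: dict = {}
handle_request("session-a", external_store)
handle_request("session-a", external_store)
print(external_store)
```

Because any instance of the function may serve the next request, the external store becomes the single place where session data lives and therefore the place that has to be secured.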

Comparing serverless and containers gives a good picture of their strengths and weaknesses. In summary, containers can be used very broadly and offer comprehensive solutions to the challenges that arise. Serverless functions bring the advantage of scaling, along with challenges such as vendor lock-in, latency, and analyzability. Compared with containers, serverless functions have a narrower field of application and are better suited to event-based architectures and applications with high load peaks.